Review: Richard A. Clarke and R.P. Eddy’s “Warnings”

by Miles Raymer

Even for those who fastidiously avoid the news, to live in the modern world is to be bombarded with visions of catastrophe. Our culture, our politics, our language––these have all become saturated with promises of impending doom. The psychological result of this predicament is among the most nefarious consequences of the global media’s invasion of daily life, and contributes to incalculable suffering, most of it needless. But only a fool would deny the many legitimate threats darkening the human horizon. Given that cause for alarm is always available, how can we know when it is actually warranted?

Richard A. Clarke and R.P. Eddy’s Warnings attempts to answer this question by positing a method for distinguishing between real, serious threats and imagined or overblown ones. To do so, they invoke the myth of Cassandra, the princess of Troy who was blessed with supernatural foresight, but cursed because no one would believe her warnings about her beloved city’s demise at the hands of the Greeks. Cassandra has become a useful label for anyone who correctly predicts disaster but is tragically ignored. Cassandras can be contrasted with Chicken Littles––attention-seeking pissants who raise alarm needlessly. Clarke and Eddy’s goal is to help readers learn to tell the difference.

Warnings is split into two parts: “Missed Warnings” and “Current Warnings.” Missed Warnings examines a group of seven verified Cassandras (experts in various fields who saw disasters coming but were ignored), and Current Warnings presents a group of seven possible Cassandras (experts now pounding the table about disasters looming ahead). A transition chapter between parts presents the idea of a “Cassandra Coefficient”––Clarke and Eddy’s purportedly rigorous method for fishing true Cassandras from the ever-roiling sea of Chicken Littles.

Clarke and Eddy’s Missed Warnings are the easy ones; they stand on firm historical ground and their analysis doesn’t require guessing about future events. These chapters analyze a variety of recent disasters that could have been prevented or mitigated, and explore the reasons why more wasn’t done to ease the blow. Clarke and Eddy convey these stories like thrillers, each anchored by an energetic but frustrated hero trying to get the world to wake up and smell the chaos. Displaying a true talent for high-octane nonfiction, Clarke and Eddy sharply outline each Cassandra’s struggle to bend an unwilling world to his or her will, and coldly calculate the causes of each failure. The topics are: the invasion of Kuwait, Hurricane Katrina, the rise of ISIS, the Fukushima nuclear disaster, Bernie Madoff’s Ponzi scheme, a mine collapse in West Virginia, and the 2008 recession.

Warnings is full of obscure but useful terms that demonstrate the complexity of the world’s resistance to Cassandras. Perhaps the most important of these is “Initial Occurrence Syndrome,” the assumption that “if a phenomenon had never happened before, it never would” (34). IOS is “a special case of availability bias, one that is more difficult to overcome because of the complete lack of precedent that would allow our brains to estimate the likelihood of such an event occurring” (35). Clarke and Eddy stress that while we should always allow data and evidence to drive our predictions, it is never safe to conclude that unprecedented events will not happen. Time and again, Cassandras are waved off by businesspeople and/or government officials who claim that we shouldn’t worry about things that have never happened.

There is plenty of other noise drowning out the cries of Cassandras. Here are some terms I found particularly edifying:

- Scientific reticence: “A reluctance to make a judgment in the absence of perfect and complete data.” (79)

- Satisficing: “When a decision maker addresses the issue but doesn’t solve the actual problem.” (116)

- Complexity mismatch: “Some decision makers are uncomfortable with the warning, in part because of its complexity and also because their lack of expertise may highlight their own inadequacies and make them dependent upon someone whose skills they cannot easily judge.” (178)

These are just a few of the many interesting ways that Clarke and Eddy explain why individuals and institutions are not better at heeding Cassandras, even when evidence is readily available and, in some cases, irrefutable. The most insidious aspect of these dynamics is that they don’t typically result from intentional negligence or malice, but rather from the inherent shortcomings of human psychology.

The chapters on Missed Warnings recount repeated failures of institutional systems that are nominally but not functionally meritocratic. In the case of the invasion of Kuwait, the Cassandra was a US intelligence operative empowered with a special ability to send an official “Warning of War” directly to President Bush’s fax machine. After choosing to exercise this power for the first time in his long and august career, he was promptly ignored by the White House (27). Thirteen months before Hurricane Katrina decimated New Orleans, FEMA conducted a simulation of a similar hurricane that predicted catastrophic damage and death, but nothing was done to strengthen the aging levees or prepare the city for the worst (39-40). One Cassandra saw through Bernie Madoff’s Ponzi scheme long before it ruined the lives of Madoff’s many investors, but it didn’t matter because Securities and Exchange Commission officials couldn’t understand the financial details of the information he presented to them (116).

Those are the examples I found most upsetting, but all of Clarke and Eddy’s case studies contain similar outrages; the information is almost always available, but the folks in charge just won’t hear the message. It would appear that humans are actually quite good at setting up systems for analyzing potential disasters, but terrible at utilizing those systems when it counts. The norm is to discredit or ignore Cassandras when responses to their warnings will be expensive and/or politically contentious, which is almost always the case.

Another important message is that communication styles matter––a lot. Many vindicated Cassandras failed in their missions not because they couldn’t get the ears of the right people, but because they came off as alarmist, haughty, or aloof. I largely blame the decision makers for using something like demeanor as an excuse for denying hard evidence, but current and future Cassandras can and should learn from these examples. Anyone seeking to head off a disaster should find a way to sugarcoat the message without weakening it, if at all possible.

My one criticism of Missed Warnings is that I think Clarke and Eddy overlooked an opportunity to distinguish between two kinds of disasters that seem quite different to me: disasters that result primarily from nature/physics, and ones that result primarily from human action. Setting aside the fact that there is no principled distinction between the two (human action is still a result of nature/physics), I do think it is important to come down hard on those who fail to respond to disasters that are physically predictable (hurricanes, earthquakes, etc.), and also to give a bit of a break to those who don’t see human-caused disasters coming (wars, financial collapses, etc.). Disasters always seem inevitable in hindsight, but before they strike, it seems much easier to be sure about something like an earthquake since it is not an issue of “if” but “when” it will occur. A particular invasion or formation of a new militant group, however, may genuinely never occur and is therefore more difficult to plan for.

Before moving on to Current Warnings, Clarke and Eddy present their proposed mechanism for identifying contemporary Cassandras: the “Cassandra Coefficient”:

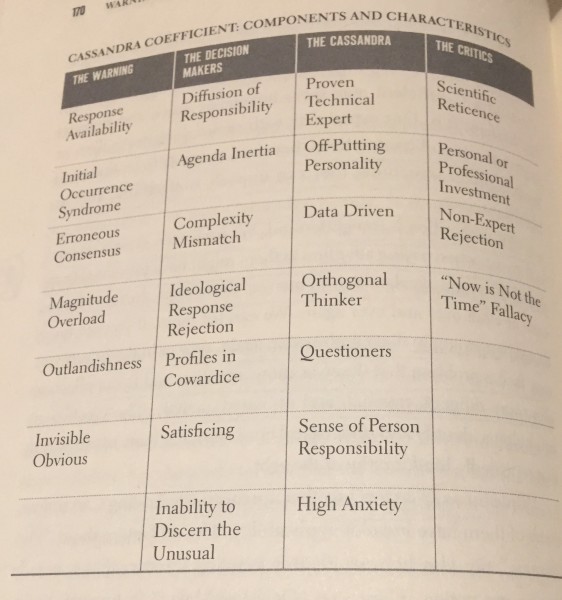

> It is a simple series of questions derived from our observation of past Cassandra Events. It involves four components: (1) the warning, the threat, or risk in question, (2) the decision makers or audience who must react, (3) the predictor or possible Cassandra, and (4) the critics who disparage or reject the warning. For each of the four components, we have several characteristics, which we have seen appear frequently in connection with past Cassandra events. (168, emphasis theirs)

The Cassandra Coefficient is by all accounts a great concept––one that I hope becomes common parlance in our discussions of how to frame the future of humanity. Clarke and Eddy put forth a rigorous set of terms that fills out the profile for each component, as shown here:

Grids like this might send some readers scurrying for lighter reading, but I was delighted to receive precise definitions for each component and characteristic. Unfortunately, the application of these terms in subsequent chapters proved far less robust than Clarke and Eddy led me to expect.

The best part of the Cassandra Coefficient is that it fully accepts the modern reality that humans have created and are now embedded in communal, national, and global systems that are far too complex for any one person or organization to comprehend:

> Systems can be so complex that even experts can’t see the disaster looming within. Complexity mismatch is a looming threat for government. For the first time, technologists are now building machines that make decisions with rationale that even the creators don’t fully understand. The accelerating growth of technology makes it increasingly difficult for scientists, let alone bureaucrats, to decipher the risks…Increasingly, we are operating or planning systems, software, or networks that no one person understands. It takes a team, one of many diverse talents. That team, however, is sometimes so large that it cannot be assembled in a conference room auditorium, or even in a stadium. (178-9)

Clarke and Eddy hit hard when it comes to the challenge of complexity, and yet I do not think they go far enough. I personally do not believe that any team of humans––no matter how numerous, experienced, or well-educated––can fully evaluate or analyze hypercomplex systems such as the global climate or the Internet. We will need artificial intelligence, or perhaps even artificial superintelligence, to grok these systems on our behalf (provided we can coax them into caring about us). Clarke and Eddy address AI in the Current Warnings section, but sadly do not seem to take seriously the possibility that AI may be human civilization’s only remaining route to sustained prosperity, even if that also means risking extinction.

On the whole, the Current Warnings section is less successful than Missed Warnings. The topics are: artificial intelligence, pandemic disease, sea-level rise, nuclear winter, the Internet of Everything, meteor strikes, and gene editing. These chapters are all interesting and informative, but Clarke and Eddy fail to cash in on the promise of the Cassandra Coefficient, mostly by watering it down to the point where one wonders why they bothered to flesh it out so thoroughly in the first place. The chapters end with cursory and inconsistent explanations of how the Coefficient applies to each situation. Clarke and Eddy also provide a grid showing a Low, Moderate, or High score for each of the Coefficient’s four components, but give little explanation of how those scores were reached. These casual applications belie the meticulous methodology that made the chapter introducing the Cassandra Coefficient so engaging. I was left wondering whether Clarke and Eddy ever decided what kind of book they wanted to write. Or perhaps their careful analyses were diluted by editors seeking to appeal to a wider audience. Whatever the case, the overall impact of Warnings suffers significantly.

Despite this critical failing, there are some terrific and terrifying insights here. By far the most distressing chapter is the one addressing sea-level rise, which a highly credible Cassandra predicts will occur at a much swifter rate than most scientists are currently willing to admit. Given the world’s continued reluctance to take the climate bull by the horns in these crucial years, I find it highly probable that human civilization as we know it will not exist by 2100. Something much better than what we have now may emerge from the chaos, but we appear to be in for at least a century or two of wretched turmoil that only the very wealthiest humans will have any chance of weathering unscathed.

Clarke and Eddy are careful to eschew relinquishment as a viable method of avoiding or escaping disasters. Although some of their possible Cassandras naively posit relinquishment of modern technologies as a path forward (279), Clarke and Eddy generally come off as followers of the proactionary principle: “If we wait for only perfect and precise information, we court disaster” (234). Even if relinquishment would create a better world, this course of action is neither historically validated nor aligned with human nature. For better or worse, humanity must press on, relying on our powers of innovation, creativity, and ambition. Such striving need not be unethical, but ethics is not required for survival.

Clarke and Eddy wrap up with a plangent call for a National Warning Office, which they think ought to be run by the US government’s executive branch:

> This small, elite team should not be…part of the intelligence community, although it could task intelligence agencies to collect and analyze information. Rather, the office would have a broad, even intentionally vague, mandate to look across all departmental boundaries for new and emerging threats. The office should not address ongoing, chronic problems, such as obesity. Rather, the focus should be on possible impending disasters that are not being addressed by any part of government. (356)

Present leadership notwithstanding, this is a laudable idea. Couple this with advanced learning algorithms designed to pinpoint global weaknesses and engineer broad-ranging solutions, and we might have a much better shot at avoiding future catastrophes.

But let’s be real. Without exception, the process of obviating disasters before they occur is difficult and expensive. Today’s global leadership appears profoundly uninterested in tackling difficult and expensive problems. Even worse, the biggest beneficiaries of such action tend to be poor, vulnerable populations––those routinely ignored by elites with the power to create positive change. Warnings doesn’t leave me with much hope; rather, I now have a much clearer idea of why and how humanity’s future might reflect––or even surpass––the worst nightmares of Clarke and Eddy’s possible Cassandras.

Rating: 7/10